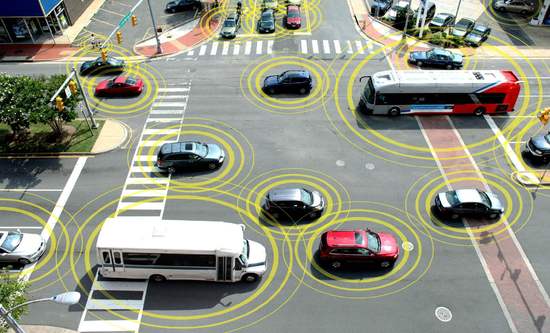

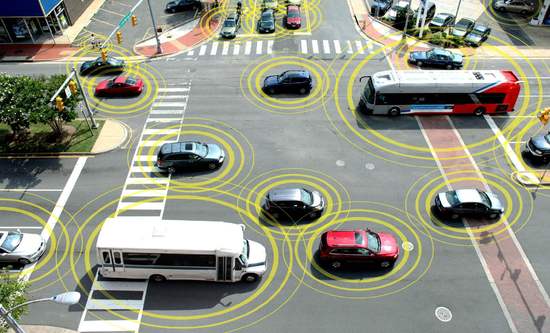

[NetEase Smart News, August 30th] Imagine this scenario: you're riding in a driverless car and suddenly, five people appear directly in front of your vehicle, with no time left for the car to stop. The car could swerve to avoid hitting them, potentially leading to the death of the passengers inside, or it could continue straight ahead, resulting in the deaths of those five individuals. What should an autonomous car do in such a situation? Perhaps the real question should be: what should the programmers instruct the car to do? And more importantly, who decides what the programmers should do?

Social values may determine life and death in such scenarios. In the age of artificial intelligence, every ethical question seems to lead to yet another complex dilemma. Professor Liz Bacon, Vice President for Architecture, Computing, and Humanities at the University of Greenwich in the UK, holds a Ph.D. in artificial intelligence and was once the chair of the British Computer Society. During her recent speech at the International Joint Conference on Artificial Intelligence in Melbourne, she highlighted that debates over the laws governing AI still face numerous intricate challenges.

In the case of the driverless car mentioned earlier, Professor Bacon pointed out that the programming within the car must replace the human thought processes required in similar situations. "A human driver has to make split-second decisions, but for a self-driving car, these decisions must be pre-programmed and evaluated systematically," she explained. "What happens when there are no ideal options?"

"One way to address this is through numerical solutions," she continued. "The car might decide to steer off the road, sacrificing the lives of its passengers to save the pedestrians. This choice depends heavily on the car's AI design and societal values. For instance, the passengers in the car might hold cures for diseases, while the pedestrians outside could be criminals escaping justice."

"But if you factor in societal value judgments, things get problematic," she noted. "If you program the car to prioritize certain lives over others based on perceived worth, the outcomes could be catastrophic. Autonomous cars could have access to vast amounts of data about the people around them, allowing them to make practical decisions. The challenge lies in determining how to program these algorithms ethically."

"Moreover, if you create an algorithm that results in fatalities, could the court view this as premeditated murder?" she questioned.

Bias or Deliberate Harm?

Professor Bacon emphasized that creating self-driving cars is a monumental ethical issue. She also suggested that governments must take the lead in addressing these complex dilemmas. "Governments need to catch up," she remarked, "but I don't think they are doing so effectively. Legislation tends to lag behind technological advancements. Governments should aim to establish relevant laws, but the finer details of decision-making can be left to companies. At the operational level, this falls to middle managers and programmers – where the risk of bias becomes particularly pronounced."

When tasked with programming a self-driving car, one might clash with their employer over how the car should respond to various road conditions. Should the car prioritize passenger safety at all costs, even if it means harming pedestrians? Professor Bacon admitted, "Bias seeps into every stage of production. Everyone has their own moral compass, and it’s challenging to navigate conflicting pressures. If you’re under stress and fear losing your job, it becomes incredibly difficult to stand by your principles."

Hacking and the Fear of "Zombie Cars"

The "Zombie Car" scene in the movie *Fast & Furious 8* showcased the terrifying prospect of autonomous vehicles being hacked. In real life, hackers in the United States have already broken into a car and altered its route and other driving functions, including automatic braking. "Artificial intelligence can play a tremendous role globally," Professor Bacon observed. "But unfortunately, there are immoral individuals who wish to harm us. In theory, they could hack into a car, drive it off a cliff, or crash it into a wall, making it appear as an accident."

Additionally, Professor Bacon highlighted that while human error causes many accidents today, the introduction of self-driving cars will significantly reduce these incidents. However, it might also introduce new types of fatalities. "A human driver might kill person A, but an autonomous car could kill person B due to its unique algorithmic logic," she explained.

Beyond self-driving cars, Professor Bacon noted that artificial intelligence will permeate other domains, such as warfare. "Soon, we won't send soldiers to war anymore," she predicted. "Instead, we'll deploy drones and robots to participate in battles and launch missiles." Remote-controlled robots fighting on the frontlines could become a reality. This shift alters the nature of war and the decision-making process.

Who to Sue When an Accident Occurs?

Whom do you sue when a loved one is injured by a self-driving car? "This is a major issue," Professor Bacon stated. "Legislation requires government assistance because this is a complex problem. Some discussions have centered on suing robots, but I don't fully grasp how this works since robots lack bank accounts or financial resources. Developing self-driving cars involves multiple stakeholders, and I don't have the answer. But insurance companies must find solutions because they need to know who is liable when accidents occur."

Currently, few people fully grasp the significance of deploying driverless cars. This is a technology that requires public education throughout its development. "Society as a whole needs to understand what artificial intelligence is and how it impacts our lives," Professor Bacon remarked. "Only after understanding these implications can individuals make informed decisions. Trust will be key," she concluded. "No one will adopt self-driving cars unless people genuinely trust this technology."

This article is produced by NetEase Smart Studio (WeChat ID: smartman163). Stay tuned for more insights on AI and the next big era!
