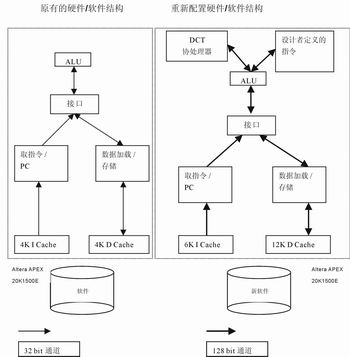

Figure 1. MediaWorks configurable processor architecture evaluation structure, implemented on an Altera APEX 20K1500 FPGA.

One way to improve performance is to use common small-form-factor interface standards such as PCMCIA and CompactFlash (CF) cards, which the latest PDAs support. Both advanced video and wireless processing systems can be built on PCMCIA and CF cards. The biggest obstacle to delivering good streaming video on a PDA is that these interfaces cannot meet the data bandwidth requirements of high-resolution video; video compression can solve this problem partly or completely. However, streaming video codecs such as MPEG4 were developed for systems with relatively unconstrained bandwidth, such as PCs running at several GHz, and they cannot meet the quality, cost, power consumption, and performance requirements of the many wireless multimedia devices on the market. Developing processors that accelerate the complex processing of streaming video codecs has therefore become a key issue.

This article introduces several methods for achieving high-quality streaming video on a PDA. MediaWorks built a configurable media processor, refined a design method based on programmable logic, and developed a set of optimized solutions. A configurable media processor also enables hardware/software co-design, which is critical to engineering productivity and speeds up the design and development of video codecs with different performance levels.

PDAs have a screen for viewing graphics and a speaker and microphone for playing back and capturing audio, but they usually do not integrate a camera for video capture. For cost reasons, they are often built around the most economical processors, which usually do not support bidirectional streaming video. Additional processors and compression/decompression hardware and software are therefore required. This article addresses the problem of implementing MPEG4 video capture, transmission, and playback on existing PDAs.

The first design step is to add video capture capability, that is, to develop a PCMCIA-based VGA-resolution camera using a sensor that can deliver a sufficient frame rate and image size. Although most PDAs cannot display a full VGA image, the image can be sent over the Internet to PC users, who can view it in full. Development is straightforward with the PCMCIA interface standard, and the CompactFlash specification can be used as an alternative. In the basic structure, all image data is transferred to the PDA over the PCMCIA bus, and software on the PDA encodes outgoing images and decodes incoming ones.

The initial result was a frame rate of 7 to 12 frames per second at QCIF resolution (176 × 144), both for the images seen by local users and for the images being sent to local users. These results prove the feasibility of the concept. However, to meet the requirements of delivering images to PC users, the image size should be larger and the frame rate higher so that playback is smoother. The next-generation design therefore needs structural changes to solve these problems.

Adding video to the PDA requires a PCMCIA/CF-based VGA camera. To raise the video performance of the system, however, both the PCMCIA bus bottleneck and the limited computing performance of the PDA must be addressed. The design achieves this by placing the video encoder on the camera side of the PCMCIA interface. Depending on the image sequence, the encoded video needs less than one tenth of the data rate of the uncompressed video stream. Encoding the stream on the camera side of the interface bus therefore allows larger video images to be transferred to the PDA at a higher frame rate.
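The bandwidth argument can be made concrete with a small calculation. The sketch below is illustrative only: it assumes 4:2:0 chroma subsampling (12 bits per pixel on average), which the article does not state, and it uses the article's "less than one tenth" figure as an upper bound on the encoded rate. The function name is hypothetical.

```python
def raw_video_rate_bps(width, height, fps, bits_per_pixel=12):
    """Uncompressed video data rate in bits per second.

    bits_per_pixel=12 is an assumption (4:2:0 sampling), not a figure from the article.
    """
    return width * height * bits_per_pixel * fps


# Frame sizes and rates quoted in the article: VGA at 20 fps, CIF at 30 fps.
vga_raw = raw_video_rate_bps(640, 480, 20)
cif_raw = raw_video_rate_bps(352, 288, 30)

# The article states that encoded video needs less than one tenth of the raw rate.
vga_encoded_max = vga_raw / 10
cif_encoded_max = cif_raw / 10

print(f"VGA raw: {vga_raw / 1e6:.1f} Mbit/s, encoded below {vga_encoded_max / 1e6:.1f} Mbit/s")
print(f"CIF raw: {cif_raw / 1e6:.1f} Mbit/s, encoded below {cif_encoded_max / 1e6:.1f} Mbit/s")
```

Under these assumptions the raw VGA stream is roughly 74 Mbit/s, which shows why moving the encoder to the camera side of the bus reduces the load on the interface so sharply.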

Encoding requires partial decoding capability, so video encoding is more computationally intensive than decoding. An encoder for the VGA camera was therefore developed using configurable processing technology. This new video architecture is also suitable for next-generation products that add wireless capabilities. Video decoding is still performed in software on the PDA.

With this new structure, you can get:

* CIF resolution images at 30 frames per second

* VGA images at more than 20 frames per second

The conventional design approach is to find the processor that best meets the task requirements. With a configurable processor such as Altera's Nios or Tensilica's Xtensa, the processor itself can instead be customized for a specific task. Design with a configurable processor proceeds as follows. First, use the APEX 20KE FPGA development system or an instruction set simulator to evaluate the initial software and hardware configuration, and identify the performance bottlenecks from the analysis results. Next, propose a way to remove the largest bottleneck using hardware, software, or a combination of the two. Then implement the proposal (parameterized instructions, processor configuration changes, coprocessors, a new system, and so on) and evaluate the result, which should confirm the performance improvement. The cycle of evaluating, proposing a plan, and verifying the plan through further evaluation repeats until the hardware/software solution meets the performance requirements, as shown in Figure 1. Some unavoidable bottlenecks may remain after this process, but the design gradually approaches the optimal point.

A configurable processor solution relies on one or more of the following:

* The processor has a parameterized instruction set.

* The processor has changeable parts such as buffer size.

* The processor has an external or specific coprocessor.

* The processor can run under multi-processor conditions.

* Combination of the above conditions.

With a parameterized instruction set, developers can use the basic processor configuration to evaluate code and find bottlenecks. A common bottleneck is insufficient cache. With a configurable processor, developers can go back and reconfigure the processor to provide more instruction or data buffering, or to move from 2 to 3 to 4 combined instructions. Another kind of bottleneck is a data path that is too narrow, which limits the coding efficiency of one or many pixel blocks. With a configurable processor, the data path can be widened so that an entire row of pixels is processed at once, saving processor cycles, and specific instructions can be created to take advantage of the wider path. Taking MPEG4 as an example, the sum of absolute differences (SAD) calculation can be customized so that 16 separate 8-bit pixel operations are replaced by a single 128-bit instruction that processes all 16 pixel values simultaneously.
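As an illustration of the SAD example, the following sketch contrasts a pixel-by-pixel SAD with a software model of a single wide operation on 16 packed 8-bit pixels. It is not the MediaWorks instruction: `sad_128` and `pack_row` are hypothetical names, and the model only mimics the result of such an instruction, not its cycle count.

```python
def sad_scalar(cur, ref):
    """Sum of absolute differences, one 8-bit pixel pair per loop step."""
    total = 0
    for a, b in zip(cur, ref):
        total += abs(a - b)
    return total


def pack_row(pixels):
    """Pack 16 8-bit pixels into one 128-bit integer (pixel 0 in the low byte)."""
    if len(pixels) != 16:
        raise ValueError("expected exactly 16 pixels")
    return int.from_bytes(bytes(pixels), "little")


def sad_128(cur_word, ref_word):
    """Model of a hypothetical custom instruction: SAD over 16 packed pixels.

    In hardware all 16 byte lanes would be handled in parallel; here the
    lanes are unpacked and summed only to reproduce the same result.
    """
    cur = cur_word.to_bytes(16, "little")
    ref = ref_word.to_bytes(16, "little")
    return sum(abs(a - b) for a, b in zip(cur, ref))


# One 16-pixel row from the current block and from the reference block.
cur_row = list(range(16))
ref_row = [15 - i for i in range(16)]

scalar_result = sad_scalar(cur_row, ref_row)
wide_result = sad_128(pack_row(cur_row), pack_row(ref_row))
print(scalar_result, wide_result)
```

The scalar version takes 16 loop iterations per row, while the wide version corresponds to one instruction issue per row, which is where the cycle savings described above would come from.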

It is also possible to create custom instructions for the discrete cosine transform (DCT), but a dedicated coprocessor may be the better choice, and a pipelined DCT coprocessor is well suited to this work. A software DCT can easily take up 15% to 20% of processor cycles. If the processor does not have the required bandwidth, the software DCT can be replaced with hardware running at 60 to 80 MHz.
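To show the kind of work a software DCT performs, here is a minimal reference sketch of an 8 × 8 two-dimensional DCT-II computed by rows and then columns. It is a textbook formulation with orthonormal scaling, chosen for clarity; it is not the codec's actual routine and is not optimized. Each block needs 16 one-dimensional transforms of 64 multiply-accumulates each, which is the regular, repetitive workload that a pipelined coprocessor can take over.

```python
import math

N = 8  # block size used by MPEG4-style codecs


def dct_1d(x):
    """Orthonormal 8-point DCT-II of a sequence of 8 numbers."""
    out = []
    for k in range(N):
        scale = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
        acc = sum(x[n] * math.cos(math.pi * (2 * n + 1) * k / (2 * N)) for n in range(N))
        out.append(scale * acc)
    return out


def dct_2d(block):
    """2-D DCT of an 8x8 block: 1-D DCT on each row, then on each column."""
    rows = [dct_1d(r) for r in block]
    cols = [dct_1d([rows[i][j] for i in range(N)]) for j in range(N)]
    # cols[j][i] holds the coefficient for row i, column j; transpose back.
    return [[cols[j][i] for j in range(N)] for i in range(N)]


# A flat block has only a DC coefficient; every AC coefficient is zero.
flat = [[128] * N for _ in range(N)]
coeffs = dct_2d(flat)
print(round(coeffs[0][0], 3))
```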

The multiprocessor design is similar to using a dedicated coprocessor. Because each frame is to be quantized or simulated, video encoding and decoding are serial in nature. The DCT or inverse DCT (iDCT) processes each frame in sequence, so a frame can be passed from one processor to the next, with each processor performing a specific function. The overall frame rate is therefore the frame rate of the slowest processor. With this solution, the processor pipeline incurs an initial startup delay, and the original encoding/decoding software must be redesigned to run in parallel.
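The relationship between stage times, overall frame rate, and startup delay can be sketched as follows. The stage names and per-frame times are hypothetical values chosen for illustration, not measurements from the design, and the model assumes each stage hands a frame to the next as soon as it finishes.

```python
def pipeline_stats(stage_times_ms):
    """Return (steady-state frames per second, startup latency in ms).

    Throughput is set by the slowest stage; the first frame emerges only
    after it has passed through every stage in turn.
    """
    slowest = max(stage_times_ms)
    fps = 1000.0 / slowest
    latency_ms = sum(stage_times_ms)
    return fps, latency_ms


# Hypothetical per-frame processing time of each processor, in milliseconds.
stages = {
    "motion estimation": 40.0,
    "DCT": 25.0,
    "quantization": 10.0,
    "entropy coding": 20.0,
}

fps, latency_ms = pipeline_stats(list(stages.values()))
single_cpu_fps = 1000.0 / sum(stages.values())

print(f"pipelined: {fps:.1f} fps after {latency_ms:.0f} ms startup")
print(f"single processor: {single_cpu_fps:.1f} fps")
```

With these assumed numbers the pipeline sustains 25 frames per second, limited by the 40 ms stage, while the first frame takes 95 ms to emerge. Speeding up any stage other than the slowest one does not raise the frame rate.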

Preliminary results for the above design scheme show that processing cycle counts have improved significantly, but further optimization is still needed (distributed digital DCT, a redesigned structure, reduced intermediate storage, and so on).

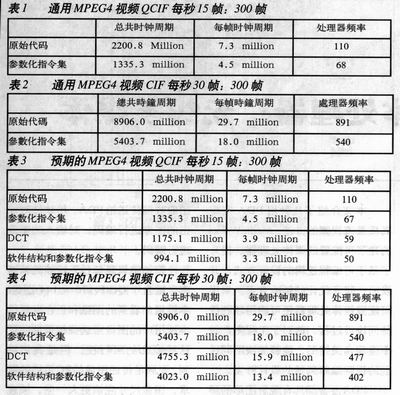

The current results are summarized in Tables 1 to 4.

Once the final design plan is determined, the plan can be ported to an ASIC or converted from an FPGA design to an Altera HardCopy device, which can reduce costs.

This article has briefly discussed how configurable media processors can be used to add multimedia functions to existing PDAs. The key is hardware/software co-design: when developing solutions to eliminate performance bottlenecks, designers need to make trade-offs between the possible hardware and software solutions.
